In many databases that drive application behavior there exist a table made up of name/value pairs that’s used to store configuration data. This is maintenance data that doesn’t typically change, but it changes often enough not to be stored as a data structure in compiled code. Over time a table like this begins take on a life of its own.

Maybe it starts out, for example, containing name/value pairs for specific web service URLs, but as time progresses we start to see some stranger things in the value column. Perhaps after a year or two there are comma separated lists of phone numbers for a single key, and eventually, as things deviate in the rush to deliver new features, you begin to see multi-delimited text strings mapped to only one key. For example, in a rush to add home page A/B testing a developer has mapped “A=40,B=30,C=20,D=10” to a single key in the configuration table instead of building a more stable data model to support and track A/B test changes for reporting purposes.

Interestingly enough, and to the delight of the business, A/B testing has become wildly successful. They have since added more tests to our config table, and now they want an application that will allow them to manage the test settings themselves without having to find someone to write SQL for database changes, and they want it now.

The problem with this is that the A/B settings are stored in a configuration table and need to be keyed a specific way or the application will throw an exception when it attempts to read them, and you don’t have confidence the business owner is impervious to the fat-finger. In working out the details to the application you don’t have time to design a new data model for A/B test settings, perhaps using XML, but you want to code something that can be re-used by other applications so you don’t have to repeat yourself, and somewhat limit technical your debt. You decide to code a SQL function that will parse multi-delimited text and present the in name/value pair table format.

I know this is a hypothetical, and somewhat tongue-in-cheek, example of why one might create something that can parse multi-delimited text, but in an unorganized development environment executing off of an unorganized list of business needs from multiple owners who don’t talk with one another, this kind of stuff can happen. Perhaps a more practical example would be parsing query string parameters so they can be stored in the database in a format that can be queried. Regardless of the example, below I walk you through how to parse multi-delimited text using SQL functions, and give you a link to download the code.

Before we dive into the code, you need to know the basic framework. This solution involves three functions:

- parse_text_before_delimited_value – This parses all the text before a specified delimiter. For example, if you had a string that looked like this: A=40,B=30,C=20,D=10 and the specified delimiter was a comma, this function would return: A=40.

- parse_text_after_delimited_value – This parses all the text after a specified delimiter. For example, if you had a string that looked like this: A=40,B=30,C=20,D=10 and the specified delimiter was a comma, this function would return: B=30,C=20,D=10.

- parse_multidelimited_values_to_table – This function uses both of the functions above, along with common table expressions (CTE) and recursion to return a two column table of the names and values of our hypothetical test settings.

Function 1: parse_text_before_delimited_value:

CREATE FUNCTION parse_text_before_delimited_value (

@text_to_parse varchar(50),

@delimiter char(1)

)

RETURNS varchar(50)

AS

BEGIN

DECLARE @local_parsedText varchar(50)

DECLARE @local_delimiterPosition INT

SET @local_delimiterPosition = PATINDEX('%' + @delimiter + '%', @text_to_parse)

IF @local_delimiterPosition != 0

SELECT @local_parsedText = SUBSTRING(@text_to_parse , 1, @local_delimiterPosition-1)

ELSE

SET @local_parsedText = ''

RETURN @local_parsedText

END

GO

This function uses PATINDEX to find the first occurrence a specified delimiter @delimiter, and SUBSTRING is used to return the text that is in front of the delimiter.

Function 2: parse_text_after_delimited_value

CREATE FUNCTION parse_text_after_delimited_value (

@text_to_parse varchar(50),

@delimiter char(1)

)

RETURNS varchar(50)

AS

BEGIN

DECLARE @local_parsedText varchar(50)

DECLARE @local_delimiterPosition INT

SET @local_delimiterPosition = PATINDEX('%' + @delimiter + '%', @text_to_parse)

IF @local_delimiterPosition != 0

SELECT @local_parsedText = SUBSTRING(@text_to_parse , @local_delimiterPosition+1, LEN(@text_to_parse)-@local_delimiterPosition)

ELSE

SET @local_parsedText = ''

RETURN @local_parsedText

END

GO

Like the first function this uses PATINDEX to find the first occurrence a specified delimiter @delimiter, and SUBSTRING, but the starting place is @local_delimiter + 1, and everything after the delimiter is returned.

Function 3: parse_multi_delimited_values_to_table

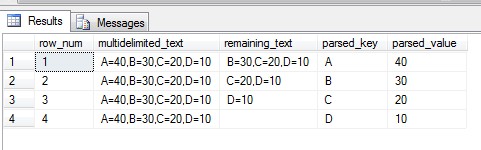

This is where the magic happens, so to speak. The pattern used here is fairly straight forward. Given a string to parse that looks like this: A=40,B=30,C=20,D=10 the function sets up a CTE with 5 columns to store a row number, the text to parse, the remaining text to parse, the parsed key and the parsed value. Using the string above the function first creates this in the CTE:

Row text remaining text parsed key parsed value

1 A=40,B=30,C=20,D=10 B=30,C=20,D=10 A 40

With this first row in place a second select statement acts on the data in the table to continue parsing the name/value pairs, and the UNION ALL enforces recursion to fill out all the rows.

CREATE FUNCTION dbo.parse_multidelimited_values_to_table(

@multidelimited_text varchar(50),

@delimiter1 CHAR(1),

@delimiter2 CHAR(1)

)

RETURNS @outputTable TABLE (

parsed_key varchar(2) NOT NULL,

parsed_value int NOT NULL

)

AS

BEGIN

DECLARE @local_row_num INT;

SET @local_row_num = 0;

WITH parse_multidelimited_table (row_num, multidelimited_text, remaining_text, parsed_key, parsed_value)

AS (

SELECT -- populates first row

@local_row_num + 1,

@multidelimited_text,

dbo.parse_text_after_delimited_value(@multidelimited_text, @delimiter2),

dbo.parse_text_before_delimited_value(dbo.parse_text_before_delimited_value(@multidelimited_text, @delimiter2), @delimiter1),

dbo.parse_text_after_delimited_value(dbo.parse_text_before_delimited_value(@multidelimited_text, @delimiter2), @delimiter1)

UNION ALL

SELECT -- parses first row to populate remaining rows

a.row_num + 1,

a.multidelimited_text,

dbo.parse_text_after_delimited_value(a.remaining_text, @delimiter2),

CASE WHEN LEN(dbo.parse_text_after_delimited_value(a.remaining_text, @delimiter2)) > 0 THEN

dbo.parse_text_before_delimited_value(dbo.parse_text_before_delimited_value(a.remaining_text, @delimiter2), @delimiter1)

ELSE

dbo.parse_text_before_delimited_value(a.remaining_text, @delimiter1)

END,

CASE WHEN LEN(dbo.parse_text_after_delimited_value(a.remaining_text, @delimiter2)) > 0 THEN

dbo.parse_text_after_delimited_value(dbo.parse_text_before_delimited_value(a.remaining_text, @delimiter2), @delimiter1)

ELSE

dbo.parse_text_after_delimited_value(a.remaining_text, @delimiter1)

END

FROM

parse_multidelimited_table a

WHERE

LEN(a.remaining_text) > 0

)

INSERT @outputTable

SELECT parsed_key, parsed_value FROM parse_multidelimited_table

ORDER BY row_num;

RETURN

END

GO

Basically, the first SELECT statement seeds the CT named parse_multidelimited_table with the data for the following SELECT statement to act on in a recursive way. The main difference is that in the first SELECT statement the parameter @multidelimited_text is what’s parsed, but in the second SELECT the column named remianing_text, from the CTE table parse_multidelimited_table (aliased ‘a’) , is what’s parsed. When the two SELECT statements are combined with a UNION ALL you have a recursive expression, the limits of which are determined when the string length of the remaining_text column is zero.

The full CTE looks something like this:

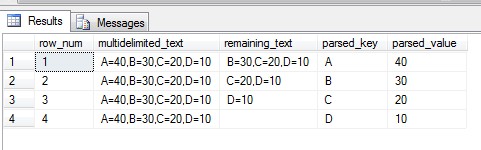

And the real two column output that is presented to the calling app looks like this:

That’s pretty much it. I know this isn’t the best experience, but you can download the complete SQL script including some test code at media fire by following this link:

http://www.mediafire.com/file/mcsb96bwfa5ky7w/multi_delimeted_parsingv2.sql

– t